I will explain the most commonly used AI terms that you may often hear but might not fully understand. This article covers terms such as RAG, GenAI, LLM, Guardrails, LLaMA, LoRA, and Agents that every developer should know. It is aimed at beginners.

I will explain them one by one in plain language:

1. LLM

LLM stands for Large Language Model. It is called “large” because the model has a very large number of parameters and is trained on a huge amount of text. It produces its answers in natural human language.

An LLM works like autocomplete: you give it text as input, known as a prompt, and it predicts the most likely next word (more precisely, the next token) again and again until the answer is complete. A trained LLM is static: it does not keep learning from the web. It answers from patterns learned during training, up to a cutoff date, unless the product around it adds a live web search.

Examples: OpenAI’s GPT models (used in ChatGPT), Google Gemini, Anthropic’s Claude, xAI’s Grok, and Meta’s Llama. Products such as Perplexity are built on top of LLMs, and many more are entering the market.
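The autocomplete idea can be sketched with a toy next-word predictor. This is only an illustration: real LLMs use neural networks working on tokens, not simple word counts, and the tiny corpus and the function names `train_bigrams` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which word in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical training text, far smaller than anything a real model sees.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # cat
print(predict_next(model, "sat"))    # on
print(predict_next(model, "piano"))  # None
```

The last call shows the “static” part: the toy model has never seen “piano”, so it has nothing to predict from.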

2. SLM

SLM stands for Small Language Model. It works the same way as an LLM, but the model has far fewer parameters and is often trained or fine-tuned on a narrower, more specific dataset. That makes it cheaper and faster to run. It suits narrow tasks such as answering FAQs from pre-existing data.

SLMs can also run directly on mobile phones. For example, the next-word suggestions you get while typing can come from a small model running on the device.

Example: If you ask how to remove payment information, an SLM replies with: Go to Account Settings -> Payment Methods -> Choose Card to remove.

3. VLM

VLM stands for Vision Language Model. “Vision” refers to whatever our eyes can see. It is an AI system that takes images and text together as input and responds in natural language.

Examples: describing an image (image captioning), reading bills and forms using OCR (Optical Character Recognition), self-driving vehicles, and reading X-rays and doctors’ notes in healthcare systems.

4. Multimodal AI

Multimodal AI is AI that can take in and combine several types of data, such as text, images, audio, and video. Let’s understand with a simple example: to understand a movie, we see the scene (video), hear the dialogue (audio), read the subtitles (text), and combine them all to understand what is going on.

Real world Examples:

Google Lens

→ Take photo + ask question

Apple Face ID

→ Camera + sensors

Tesla Self-driving

→ Cameras + sensors + maps

AI assistants

→ Voice + text + screen understanding

5. Generative AI (GenAI)

AI thinks, GenAI creates. Consider a student who has read thousands of books, seen thousands of images, and listened to thousands of conversations. If you ask that student to “write a story”, the student creates something new rather than copying. That’s Generative AI.

GenAI is AI that creates new content such as text, images, code, audio, or video based on a prompt given by the user.

Examples: image generators such as Midjourney, chatbots, and design, music, and video tools.

6. Agentic AI

Agentic AI doesn’t just answer your queries; it takes actions on your behalf. Suppose you give this prompt to a personal assistant AI: “Book the cheapest flight for me from New York to Los Angeles and give me booking details”.

An Agentic AI would work like this:

1. Finds flights

2. Compares prices

3. Books the ticket

4. Emails you the details

5. Stops when the task is done
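The five steps can be sketched as a small program. This is a minimal sketch, not how any real agent product is built: the flight data, the airline names, and every function here (`find_flights`, `pick_cheapest`, `book_ticket`, `email_details`, `run_agent`) are hypothetical stand-ins for real APIs.

```python
# All data and tool functions here are hypothetical stand-ins for real APIs.
FLIGHTS = [
    {"id": "F1", "airline": "AirOne", "price": 320},
    {"id": "F2", "airline": "SkyTwo", "price": 275},
    {"id": "F3", "airline": "JetThree", "price": 410},
]

def find_flights(origin, destination):
    """Step 1: look up available flights (stubbed with fixed data)."""
    return list(FLIGHTS)

def pick_cheapest(flights):
    """Step 2: compare prices and keep the lowest one."""
    return min(flights, key=lambda f: f["price"])

def book_ticket(flight):
    """Step 3: pretend to book and return a confirmation."""
    return {"confirmation": "CONF-" + flight["id"], "flight": flight}

def email_details(booking, outbox):
    """Step 4: pretend to send an email by appending to an outbox list."""
    flight = booking["flight"]
    outbox.append(
        f"Booked {flight['airline']} for ${flight['price']}: {booking['confirmation']}"
    )

def run_agent(origin, destination):
    """Run the steps in order and stop when the task is done (step 5)."""
    outbox = []
    flights = find_flights(origin, destination)
    cheapest = pick_cheapest(flights)
    booking = book_ticket(cheapest)
    email_details(booking, outbox)
    return booking, outbox

booking, outbox = run_agent("New York", "Los Angeles")
print(outbox[0])  # Booked SkyTwo for $275: CONF-F2
```

In this sketch the order of the steps is hard-coded. In a real agent, a language model usually decides which tool to call next based on the result of the previous step.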

Examples:

Dev agents like GitHub Copilot integrated into Microsoft Visual Studio Code.

Customer support agents that resolve tickets end-to-end.

Auto research assistants that search, read, and summarize.

7. Autonomous Agents

Autonomous agents are AI systems that decide for themselves what to do next and keep working without asking for instructions again and again. They focus mainly on the outcome rather than on step-by-step instructions.

Examples: Self-driving cars watch the road, traffic, and the vehicles ahead, and keep adjusting speed based on the environment.

Coding agents write, check, and fix code automatically.

Trading bots buy and sell based on market signals.

8. RAG

RAG (Retrieval Augmented Generation) means that instead of answering only from memory, the AI first looks up a real source and retrieves the relevant information, then uses that information to generate the final answer. This grounds the answer in actual documents and greatly reduces guessing, though it does not remove it completely.

How does RAG work?

– You ask a question in the form of a prompt

– AI searches documents (PDFs, DB, knowledge base, whatever is stored)

– Finds most relevant chunks

– Sends them to the language model

– Model writes an answer based on those facts
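The retrieval part of these steps can be sketched in a few lines. To keep it self-contained, this sketch ranks documents by simple word overlap instead of the embedding search a real RAG system would normally use, and it stops at building the prompt rather than calling a model. The documents and the function names `tokenize`, `retrieve`, and `build_prompt` are made up for illustration.

```python
import re

# Hypothetical knowledge base; a real system would load PDFs or database rows.
DOCUMENTS = [
    "Leave policy: employees get 20 days of paid leave per year.",
    "Expense policy: submit receipts within 30 days of purchase.",
    "Remote work policy: employees may work remotely two days a week.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        documents, key=lambda d: len(q_words & tokenize(d)), reverse=True
    )
    return ranked[:top_k]

def build_prompt(question, chunks):
    """Put the retrieved chunks in front of the question for the model."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "What is our leave policy?"
chunks = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, chunks)
print(prompt)  # this prompt would then be sent to the language model
```

The key design point is that the model only sees the retrieved chunk plus the question, so its answer can be traced back to a stored document.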

Example:

Company internal chatbots that answer questions such as “what is our leave policy?”.

Legal document Q&A.

A banking support bot that can answer queries from a lot of documents.

9. Vector Database

A Vector Database is a type of database that stores and indexes data as vectors so that we can query it by meaning. The results do not come from an exact keyword match; instead it returns items with a related meaning, which we call semantic similarity.

To capture the user’s intent, it uses Embeddings, which convert the data into a long list of numbers called a Vector [0.11, -0.77, 0.54, …….].

Vector DBs are commonly used with RAG.

Also used in recommendation systems, e-commerce product search, and image search.

Example: If you search for “which is the latest movie on Netflix?”, the system calculates the mathematical distance between the vector for your query and the vector for each movie. The smaller the distance, the more relevant the result.

Two of the metrics used to calculate distance:

Cosine Similarity: Measures the angle between two vectors, ignoring their length (focuses on meaning). A higher value means a closer match.

Euclidean Distance: Measures the straight-line distance between two vectors. A lower value means a closer match.
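Both metrics are short formulas, so here is a minimal sketch in plain Python. The two-number vectors are made up for illustration; real embeddings have hundreds or thousands of numbers, and vector databases use optimized implementations.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between the points a and b."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

query = [1.0, 0.0]
same_direction = [2.0, 0.0]   # points the same way, but is twice as long
perpendicular = [0.0, 3.0]    # points in an unrelated direction

print(cosine_similarity(query, same_direction))   # 1.0
print(euclidean_distance(query, same_direction))  # 1.0
print(cosine_similarity(query, perpendicular))    # 0.0
```

Notice that cosine similarity treats `query` and `same_direction` as a perfect match even though they are one unit apart, because it only looks at direction and not at length.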

10. Embeddings

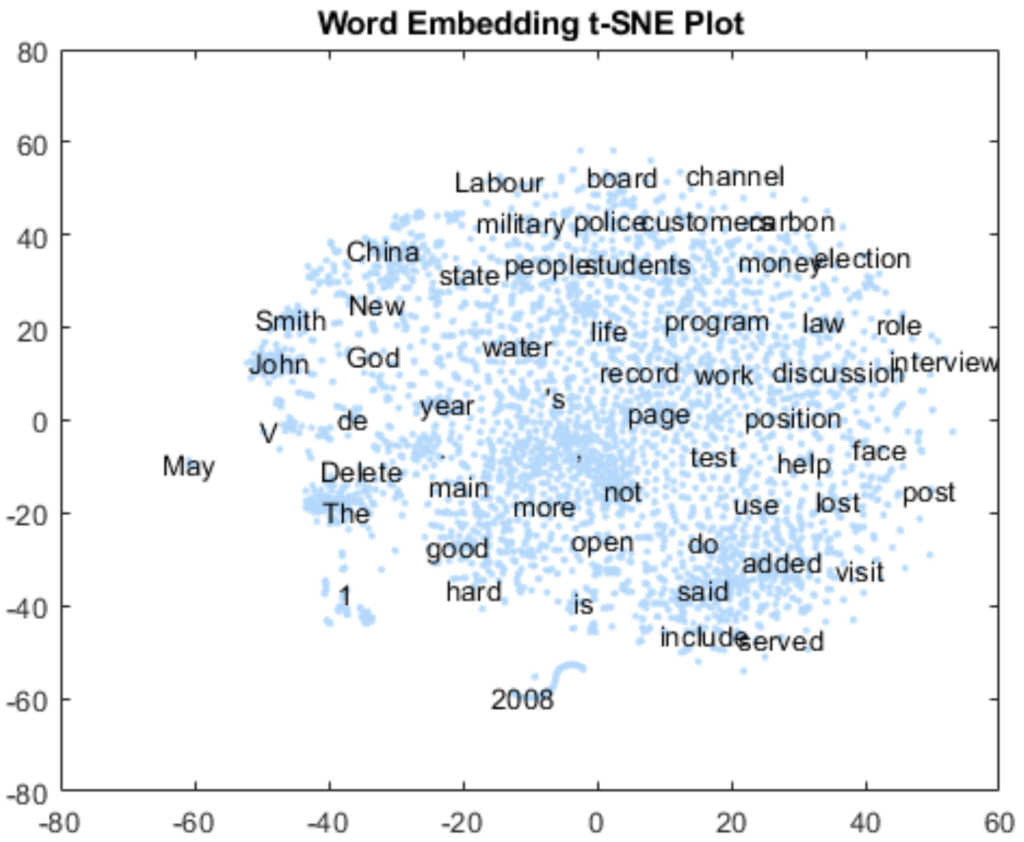

Embeddings are a way to convert text, image, video, and audio into numbers and make predictions based on those numbers only. It looks something like the image below, which is an embedding space. Similar meaning words are kept together so that even if you query some text, this will still provide you with the related information.

There are thousands and tons of dots/numbers generated that we humans can’t read or understand; only machines can do.

It is used in semantic search, RAG, chatbots, duplicate detection, and recommendation engines.

11. Semantic Search

Semantic search means searching by meaning rather than by exact wording. Instead of looking for the exact keyword, it tries to understand what you actually want.

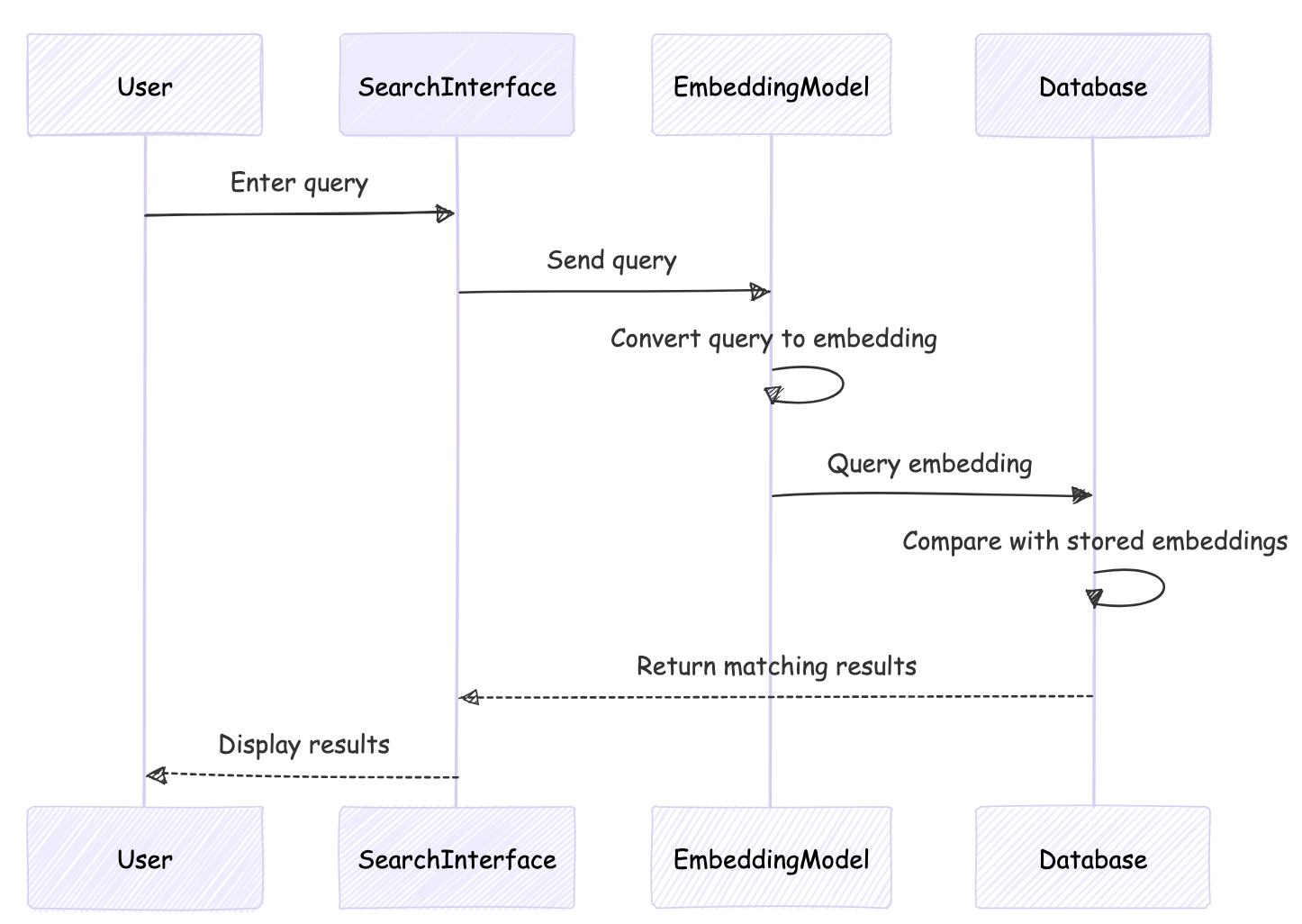

Here is the flow diagram of Semantic search:

How the semantic search works (high-level)

- Text → Embeddings

Your query is converted into an embedding (numbers that internally represent meaning) - Compare meanings

That embedding is compared with stored embeddings - Similarity scoring

The system finds text with closest meaning - Return best matches

Results ranked by semantic similarity
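The flow above can be sketched end to end with a toy embedding. Here a hand-made word list stands in for a trained embedding model, which is a big simplification; the `CONCEPTS` lexicon, the documents, and the function names `embed`, `cosine`, and `search` are all assumptions made for this example.

```python
import math

# Hypothetical hand-made lexicon standing in for a trained embedding model.
CONCEPTS = {
    "payment": {"card", "payment", "billing", "refund"},
    "travel": {"flight", "trip", "hotel", "airport"},
    "food": {"pizza", "restaurant", "dinner", "recipe"},
}

def embed(text):
    """Turn text into a vector with one count per concept."""
    words = text.lower().split()
    return [sum(w in vocab for w in words) for vocab in CONCEPTS.values()]

def cosine(a, b):
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query, documents):
    """Return (score, document) pairs ranked by similarity, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in documents]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

docs = [
    "how to remove a card from billing",
    "best pizza restaurant in town",
    "book a hotel near the airport",
]
results = search("where to eat dinner", docs)
print(results[0][1])  # best pizza restaurant in town
```

The query and the winning document share no keyword at all. They match only because “dinner”, “pizza”, and “restaurant” land on the same concept, which is the whole point of semantic search.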

12. Knowledge Graph AI

A Knowledge Graph stores data as a network of connected entities, like a map, instead of as lists such as rows in a table. It records the relationships between entities.

Example: Instead of storing “Washington is the Capital of the USA”, the knowledge graph will store it as Washington ──(capital_of)──▶ USA

Here are the building blocks and terms used:

A Knowledge Graph has three building blocks:

- Entities – real-world things (Company, Person, City, Product)

- Relationships – how entities are connected (capital_of, located_in, works_at)

- Attributes – properties of entities (name, population, date)

Each fact is stored as entity, relationship, entity (for example Washington, capital_of, USA). These facts are called Triples.
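Triples are easy to picture in code. The sketch below stores a few facts as plain tuples and filters them; real knowledge graphs use dedicated graph databases and query languages, and the example facts and the `query` helper are made up for illustration.

```python
# Facts stored as (subject, relationship, object) triples. Illustrative data.
TRIPLES = [
    ("Washington", "capital_of", "USA"),
    ("Paris", "capital_of", "France"),
    ("Alice", "works_at", "Acme"),
    ("Acme", "located_in", "USA"),
]

def query(triples, subject=None, relation=None, obj=None):
    """Return every triple matching the given parts; None matches anything."""
    return [
        (s, r, o)
        for (s, r, o) in triples
        if (subject is None or s == subject)
        and (relation is None or r == relation)
        and (obj is None or o == obj)
    ]

print(query(TRIPLES, relation="capital_of", obj="USA"))
# [('Washington', 'capital_of', 'USA')]
print(query(TRIPLES, obj="USA"))
# [('Washington', 'capital_of', 'USA'), ('Acme', 'located_in', 'USA')]
```

The second query shows why relationships matter: one lookup returns everything connected to “USA”, whatever the kind of connection.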

13. Context Window

Think of the Context Window as the AI’s working memory. It is the maximum amount of text that the AI can see and take into account at one time.

AI does not count words; it counts tokens.

The phrase “Artificial Intelligence” ≈ 2-3 tokens

A token is usually shorter than a word, so an 8K context window ≈ 6,000 words of English text (roughly).

If the tokens exceed the limit, the oldest part of the conversation no longer fits, so the AI may forget it and the answers can become inconsistent.
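Here is a minimal sketch of what “forgetting” can look like in practice. It assumes a very crude tokenizer that counts one token per word, which real tokenizers do not do, and a simple policy of dropping the oldest messages first; `count_tokens` and `fit_to_window` are hypothetical helper names.

```python
def count_tokens(text):
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_window(messages, max_tokens):
    """Keep the newest messages that fit in the budget; drop the oldest."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

history = [
    "My name is Sam",                  # 4 tokens
    "I live in Austin Texas",          # 5 tokens
    "What is the weather like today",  # 6 tokens
]
print(fit_to_window(history, max_tokens=12))
# ['I live in Austin Texas', 'What is the weather like today']
```

With a budget of 12 tokens the first message no longer fits, so a model given this trimmed history would not know the user’s name.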

14. Transformer

Transformer is an AI architecture built around a mechanism called Attention. It allows models like chatbots to consider the context, relationships, and meaning of all the words in the input at once.

After looking at everything at once, it decides which words matter the most.

Let’s understand this with an example:

“I went to the bank to withdraw money.”

The word bank pays attention to: withdraw and money

So the model reads it as a financial bank, not as a river bank.

A simple analogy helps to picture this behaviour:

Imagine a round-table meeting:

- Every word listens to every other word

- Important words speak louder

- Meaning comes from interaction

That’s a Transformer.
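The round-table idea can be sketched with the scaled dot-product formula that attention is based on. This is heavily simplified: the two-number word vectors are invented for the example, and the same vector is used as both query and key, whereas a real Transformer learns separate projections and also mixes in value vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical 2-number vectors: [finance-ness, nature-ness].
words = ["bank", "withdraw", "money", "the"]
vectors = {
    "bank": [1.0, 1.0],      # ambiguous: could be finance or a river bank
    "withdraw": [2.0, 0.0],
    "money": [2.0, 0.0],
    "the": [0.0, 0.0],       # carries almost no meaning
}

weights = attention_weights(vectors["bank"], [vectors[w] for w in words])
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

In this toy setup “withdraw” and “money” receive more weight than “the”, which is the “important words speak louder” part of the analogy.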

15. Encoder-Decoder Architecture

It is an AI design in which one part understands the input and another part responds with an output. In simple terms:

Encoder = understands

Decoder = responds

It is mainly used in:

Language translation

Speech-to-text

Question answering

Text summarization

16. LLaMA

LLaMA stands for Large Language Model Meta AI. It is created by Meta AI under the family of open-weight large language models. It is an alternative to OpenAI in order to help developer to run and build their own model.

You can run it locally, on your own server, or in the cloud. You often hear LLaMA, LLaMA 2, and LLaMA 3. With each generation, it improves Reasoning, Context understanding, and multilingual ability. If you want a ChatGPT-like response but self-hosted, LLaMA is a top choice.

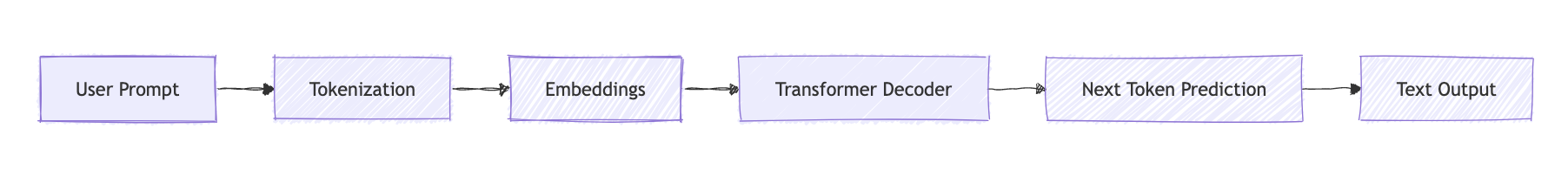

High level flow chart of LLaMA working:

17. Prompt Engineering

Prompt Engineering is the skill of asking the AI in the right way so it gives better, more accurate and more useful answers.

Consider the following example:

If you ask the AI:

“Explain AI” → vague answer

“Explain AI to a beginner in 5 bullet points with examples” → clear answer

Same AI. Better instruction. Better understanding.
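The difference between the two prompts is just added detail, which can be sketched as a small prompt builder. The function name `build_prompt` and its parameters are made up for illustration; there is no standard API for this.

```python
def build_prompt(topic, audience=None, output_format=None, with_examples=False):
    """Assemble a prompt from optional pieces of instruction."""
    parts = [f"Explain {topic}"]
    if audience:
        parts.append(f"to {audience}")
    if output_format:
        parts.append(f"in {output_format}")
    if with_examples:
        parts.append("with examples")
    return " ".join(parts)

print(build_prompt("AI"))
# Explain AI
print(build_prompt("AI", audience="a beginner",
                   output_format="5 bullet points", with_examples=True))
# Explain AI to a beginner in 5 bullet points with examples
```

Each extra argument answers a question the model would otherwise have to guess: who is reading, what shape the answer should take, and how concrete it should be.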

18. Chain-of-Thought

Chain of Thought means asking the AI to reason step by step before giving the final answer, instead of jumping straight to the answer.

Let’s take a simple example:

If you ask a person:

- “What’s 15% of 200?” → “30”

- “Show how you calculate 15% of 200” → “First find 10%, then 5%, then add…”

Chain-of-Thought is that step-by-step explanation.

High-Level Working:

- You ask the model to reason step by step

- The model generates the intermediate steps

- The final answer is based on that reasoning
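To make the 15% example concrete, the sketch below writes out the intermediate steps a step-by-step answer would contain. It only mimics the shape of such an answer in ordinary code; it does not call a model, and the function name and the prompt wording are assumptions.

```python
def fifteen_percent_steps(value):
    """Return the intermediate steps and the final answer for 15% of value."""
    ten_percent = value / 10
    five_percent = ten_percent / 2
    answer = ten_percent + five_percent
    steps = [
        f"10% of {value} is {ten_percent:g}",
        f"5% is half of that: {five_percent:g}",
        f"10% + 5% = {answer:g}",
    ]
    return steps, answer

# A prompt that asks for reasoning, as opposed to just "What's 15% of 200?"
cot_prompt = "What's 15% of 200? Show your steps before the final answer."

steps, answer = fifteen_percent_steps(200)
for step in steps:
    print(step)
print(answer)  # 30.0
```

Seeing the steps makes a mistake easier to spot: if the 10% line were wrong, you would know exactly where the final answer went off.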

19. Guardrails

Guardrails are protective checks around an AI system that keep its responses safer and more predictable.

Guardrails are important because without them the AI can give harmful advice, leak sensitive information, hallucinate facts, go off topic, or break company rules.

Where can we implement guardrails?

Guardrails can be applied at multiple points:

- Input guardrails – check user prompts

- System guardrails – define allowed behavior

- Output guardrails – filter responses based on defined rules

- Tool guardrails – restrict which actions/tools the AI can use
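Three of these checkpoints can be sketched as plain functions. This is a minimal sketch with made-up rules: the blocked words, the allowed tool names, and the functions `check_input`, `filter_output`, and `check_tool` are hypothetical, and production systems rely on much richer policies and classifiers. System guardrails usually live in the model’s instructions, so they are not shown here.

```python
import re

# Hypothetical rules; real systems use far richer policies and classifiers.
BLOCKED_TOPICS = {"password", "exploit"}
ALLOWED_TOOLS = {"search_docs", "create_ticket"}
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def check_input(prompt):
    """Input guardrail: reject prompts that mention a blocked topic."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return not (words & BLOCKED_TOPICS)

def filter_output(response):
    """Output guardrail: mask email addresses before showing the response."""
    return EMAIL_PATTERN.sub("[redacted]", response)

def check_tool(tool_name):
    """Tool guardrail: allow only tools on the approved list."""
    return tool_name in ALLOWED_TOOLS

print(check_input("How do I reset my router?"))      # True
print(check_input("Give me the admin password"))     # False
print(filter_output("Contact jane.doe@example.com")) # Contact [redacted]
print(check_tool("delete_database"))                 # False
```

Each function sits at a different point in the pipeline, so a request has to pass the input check, stay within the allowed tools, and have its response filtered before the user sees it.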

20. Hallucination

Hallucination happens when the AI sounds very confident about an answer that is actually wrong or not supported by the facts.

Imagine a student who:

- Doesn’t know the answer

- Still writes something that sounds correct

That’s exactly what an AI hallucination looks like.

Why does AI hallucinate?

The model is trained to predict a plausible next word, not to check facts. It also tends to produce an answer rather than reply “I don’t know”.

21. Model Drift

Model Drift happens when a model was trained on old data and the real world has since changed, so its performance degrades and its responses no longer match current needs.

Why does model drift happen?

There can be many causes: user behaviour on a website changes, market conditions shift, or policies and rules change. For example, a model trained on data from the COVID period may not fit the present situation. The model stays the same; it is the world that changes.
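One simple way to notice that the world has changed is to compare recent data with the data the model was trained on. The sketch below uses a plain comparison of averages, which is only one of many possible checks; the order counts, the 20% tolerance, and the function name `drift_detected` are assumptions for illustration, and it assumes the training average is not zero.

```python
from statistics import mean

def drift_detected(training_values, recent_values, tolerance=0.2):
    """Flag drift when the recent mean moves more than `tolerance`
    (as a fraction) away from the training mean."""
    baseline = mean(training_values)
    current = mean(recent_values)
    relative_change = abs(current - baseline) / abs(baseline)
    return relative_change > tolerance

# Hypothetical daily order counts.
training_orders = [100, 110, 90, 105, 95]  # mean 100
stable_week = [98, 104, 101, 97, 100]      # mean 100
changed_week = [150, 160, 145, 155, 140]   # mean 150

print(drift_detected(training_orders, stable_week))   # False
print(drift_detected(training_orders, changed_week))  # True
```

When a check like this fires, the usual response is to retrain or update the model on more recent data.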

22. LoRA

LoRA stands for Low-Rank Adaptation. It is a fine-tuning technique that trains only a small add-on layer instead of all the weights of a large model.

High-level working of LoRA:

1. Freeze the original model

2. Insert small low-rank matrices in key layers

3. Train only those small matrices, which form the add-on layer

4. Combine them with the frozen base model

So it is the same base model with new behaviour.
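The arithmetic behind those steps is small enough to sketch in plain Python. The matrix values are made up, no training happens here, and the scaling factor that LoRA implementations usually apply to the update is left out to keep the example short.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Add two matrices of the same shape element by element."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def lora_param_count(d_out, d_in, rank):
    """Trainable numbers in B (d_out x rank) plus A (rank x d_in)."""
    return d_out * rank + rank * d_in

# Frozen base weight (3x3) and a rank-1 adapter; values are made up.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]  # 3x1, trainable
A = [[0.5, 0.0, 0.5]]      # 1x3, trainable

delta = matmul(B, A)       # 3x3 update built from only 6 numbers
W_merged = add(W, delta)   # combine the adapter with the frozen base weights

print(W_merged)
# [[1.5, 0.0, 0.5], [0.0, 1.0, 0.0], [1.0, 0.0, 2.0]]
print(lora_param_count(1000, 1000, rank=8), "trainable vs", 1000 * 1000, "in the full matrix")
# 16000 trainable vs 1000000 in the full matrix
```

The second line of output is why LoRA is popular: for a 1000 x 1000 layer with rank 8, only 16,000 numbers are trained instead of one million.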
