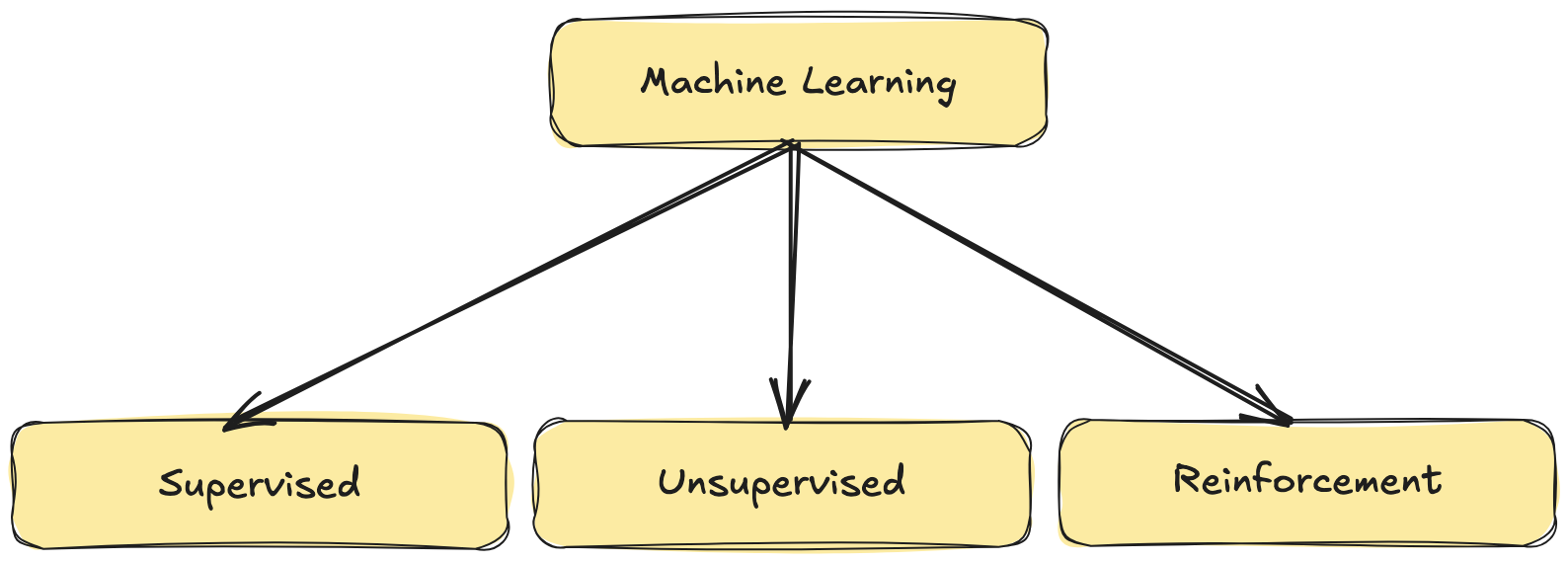

Machine Learning (ML) Types

Machine learning is mainly divided into three types: supervised learning, unsupervised learning, and reinforcement learning. We will go through them one by one, each with a simple example.

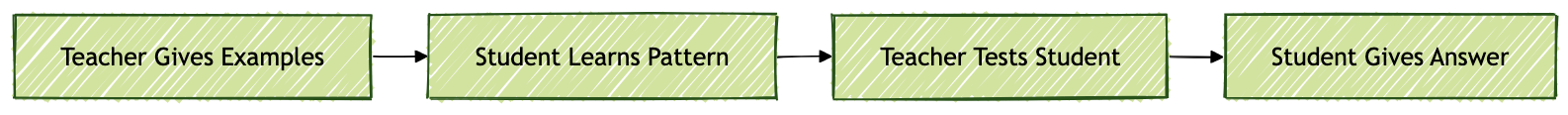

Supervised Learning

This is the most common use case of machine learning. Simply put, it is like learning under the supervision of a teacher. The teacher gives examples, and the student analyses the patterns and memorises the concepts. With their help, the student can then solve new problems. The teacher supervised the learning process.

In a similar way, we provide the machine with input objects along with the desired output for each one. This requires the dataset to be labeled.

Labeled data means each input is already tagged with the correct output.

Before training, the data has to be cleaned: examples whose labels point to the wrong output must be found and corrected. This takes a substantial amount of time. Once the data is clean, supervised learning can begin. The machine learns patterns from the data and produces a model that can be exported and used to make predictions.

Mapping function: Y = f(x)

where:

- x = input features

- Y = output label / target value

- f = transformation (mapping) function

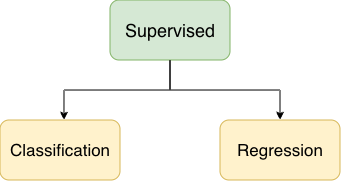

Tasks performed in supervised learning:

Classification

Classification predicts which category (class) an input belongs to. Let's look at the main types of classification:

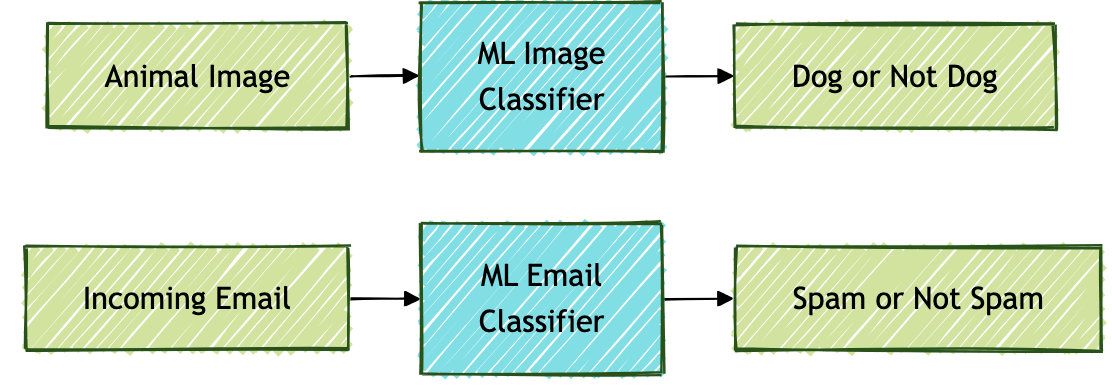

Binary Classification

In binary classification, each input is assigned one of exactly two labels. For example, an image is labeled either as a dog or as not a dog.

Another example is incoming mail, which is classified as either spam or not spam.

These are classic examples of classification, widely used across industries.

Both are binary classification, because the model decides between two classes.
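To make binary classification concrete, here is a minimal Python sketch, not a production spam filter. It assumes each email has already been reduced to a single hypothetical feature, the number of suspicious words it contains, and it learns from labeled examples the threshold that misclassifies the fewest training emails (a one-feature "decision stump"). Real spam filters use many features and stronger models.

```python
def learn_threshold(counts, labels):
    """Learn the count threshold that misclassifies the fewest training emails.

    Learned rule: predict 1 (spam) if count >= threshold, else 0 (not spam).
    Candidate thresholds are the distinct counts seen in the training data.
    """
    best_t, best_errors = None, len(labels) + 1
    for t in sorted(set(counts)):
        errors = sum((c >= t) != bool(y) for c, y in zip(counts, labels))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def predict(count, threshold):
    """Apply the learned rule to a new email."""
    return 1 if count >= threshold else 0


# Hypothetical labeled data: suspicious-word count per email, 1 = spam, 0 = not spam
counts = [0, 1, 2, 1, 7, 9, 6, 8]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = learn_threshold(counts, labels)
print(threshold)               # 6
print(predict(10, threshold))  # 1 (spam)
print(predict(0, threshold))   # 0 (not spam)
```

The labels are what make this supervised: without them there would be no way to score a candidate threshold.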

Multiclass Classification

Multiclass classification predicts one class out of more than two possible classes.

In this example, an ML image classifier is given a flower image and must predict the flower's color. Here there are four classes, and the classifier answers with exactly one of them (a color, in this case).

Both binary and multiclass classifiers can be built with shallow (classical) machine learning or with deep learning.

One of the most common classification algorithms is the SVM (Support Vector Machine).
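A minimal multiclass sketch for the flower color example, assuming each image has already been reduced to its average (R, G, B) color (a hypothetical feature; a real image classifier would learn its own features). It uses a nearest-centroid classifier, chosen here only because it fits in a few lines: it averages the labeled examples of each class and answers with the single class whose average is closest. It is not an SVM; libraries such as scikit-learn provide SVMs for this (`sklearn.svm.SVC`).

```python
import math


def fit_centroids(features, labels):
    """Average the feature vectors of each class: one centroid per class."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(vec, centroids):
    """Return the single class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))


# Hypothetical features: average (R, G, B) color of each flower image
features = [
    (250, 20, 30), (230, 40, 50),      # red
    (240, 230, 40), (250, 240, 60),    # yellow
    (30, 40, 220), (50, 60, 240),      # blue
    (245, 245, 250), (235, 240, 235),  # white
]
labels = ["red", "red", "yellow", "yellow", "blue", "blue", "white", "white"]

centroids = fit_centroids(features, labels)
print(predict((245, 35, 40), centroids))  # red
print(predict((40, 50, 200), centroids))  # blue
```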

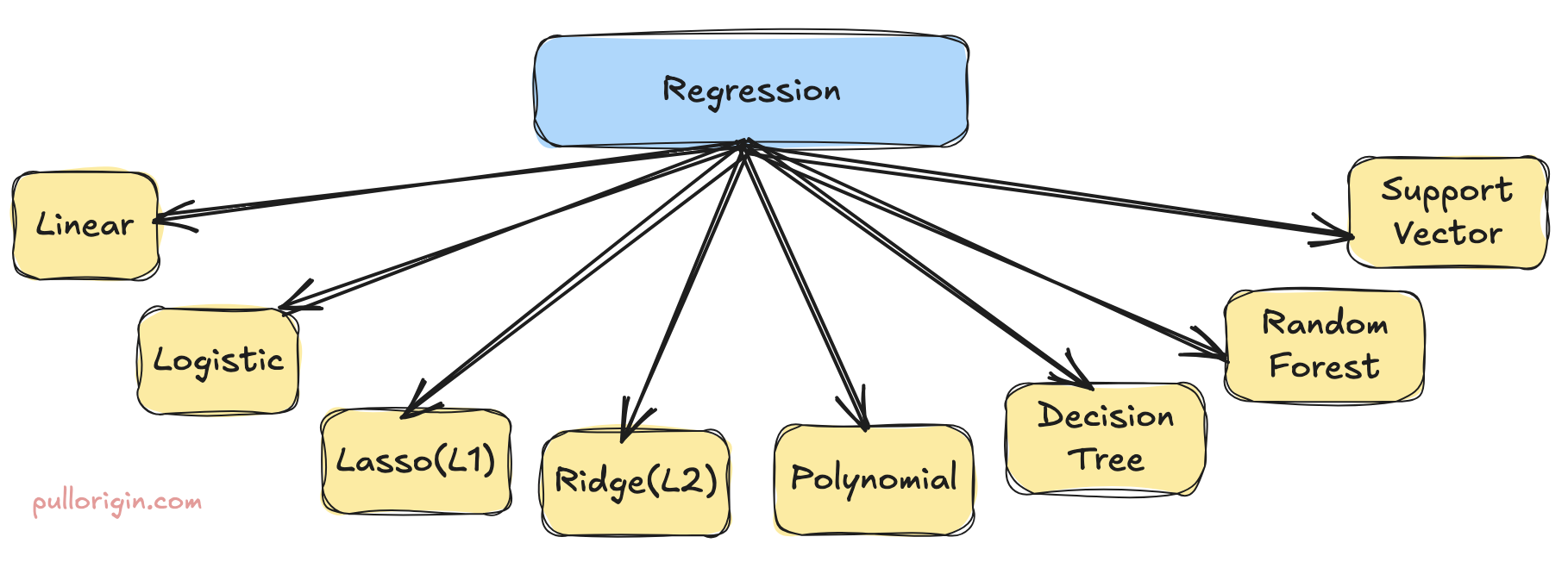

Regression

Regression is a straightforward method to predict a value based on historical data.

Regression predicts quantities rather than categories. In short, it is used to predict continuous numerical values from input data. Some examples:

- House price based on size, location, etc.: $512,800

- Tomorrow's temperature: 13.5°F

- Next month's sales forecast: 2400

How does regression actually work?

- It takes labeled data: inputs paired with numeric outputs.

- The model learns the pattern from these input-output combinations.

- It learns a best-fit function.

- It is then ready to predict values for new data.

Here are the different types of regression. We will cover each one in simple language.

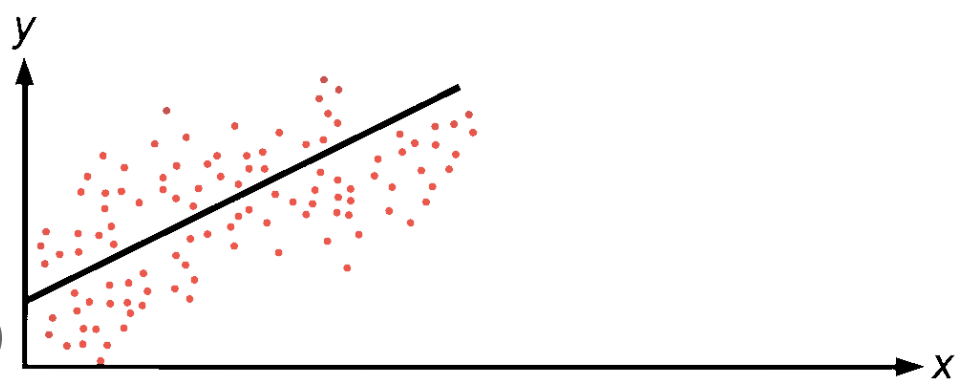

Linear regression

Linear regression is one of the most commonly used methods for predicting a numeric output. Think of it as drawing the best-fitting straight line through the data points on an X-Y plot. After plotting, it looks like the figure in this section.

Let us take a popular example of house price prediction.

Bigger house -> higher price

Smaller house -> lower price

So linear regression finds the best straight line relating house size to house price.

When to use? Linear regression works well when the relationship between the input and output variables is roughly linear, i.e. it can be described by a straight line. Here, a "variable" corresponds to a column if you think of the data as an Excel sheet.
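The house-size example can be sketched with ordinary least squares, the standard way to fit the best straight line. The data below is hypothetical and the code uses only plain Python; in practice you would usually reach for a library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for the line y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel, so plain sums suffice
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x  # the best-fit line passes through the means
    return intercept, slope


# Hypothetical data: house size (square metres) -> price (thousands of dollars)
sizes = [50, 80, 100, 120, 150]
prices = [155, 235, 310, 355, 450]

intercept, slope = fit_line(sizes, prices)
print(f"price ≈ {intercept:.1f} + {slope:.2f} * size")
print(f"predicted price for 110 m²: {intercept + slope * 110:.0f}k")
```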

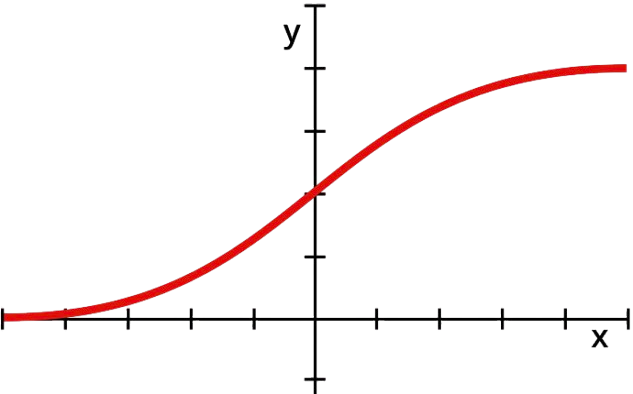

Logistic Regression

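The name includes "regression", but it is used for classification: it does not predict a continuous number.

Logistic regression uses a mathematical function known as the sigmoid function, σ(z) = 1 / (1 + e^(-z)), which creates an 'S'-shaped curve. This ensures the output always lies between 0 and 1, for example 0.1 or 0.8, and it can be read as the probability of the "Yes" class. A threshold (commonly 0.5) then turns that probability into a Yes/No decision.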

When to use?

When we want to decide between Yes/No.

Example: based on an applicant's credit score, should the loan be given or not? The model predicts whether the applicant will default or pay back.
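A minimal sketch of logistic regression for the loan example, trained with plain batch gradient descent on the log loss. The credit scores and outcomes are hypothetical, and the learning rate, number of epochs, and the 0.5 decision threshold are illustrative choices, not recommendations.

```python
import math
import statistics


def sigmoid(z):
    """S-shaped curve that squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # gradient of log loss w.r.t. (w * x + b)
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical history: credit score and outcome (1 = repaid, 0 = defaulted)
scores = [500, 540, 580, 620, 660, 700, 740, 780]
repaid = [0, 0, 0, 1, 0, 1, 1, 1]

# Standardise the score so gradient descent converges smoothly
mu, sigma = statistics.mean(scores), statistics.pstdev(scores)
w, b = train_logistic([(s - mu) / sigma for s in scores], repaid)


def prob_repay(score):
    return sigmoid(w * (score - mu) / sigma + b)


for score in (520, 760):
    p = prob_repay(score)
    print(score, round(p, 2), "approve" if p >= 0.5 else "reject")  # 0.5 = decision threshold
```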

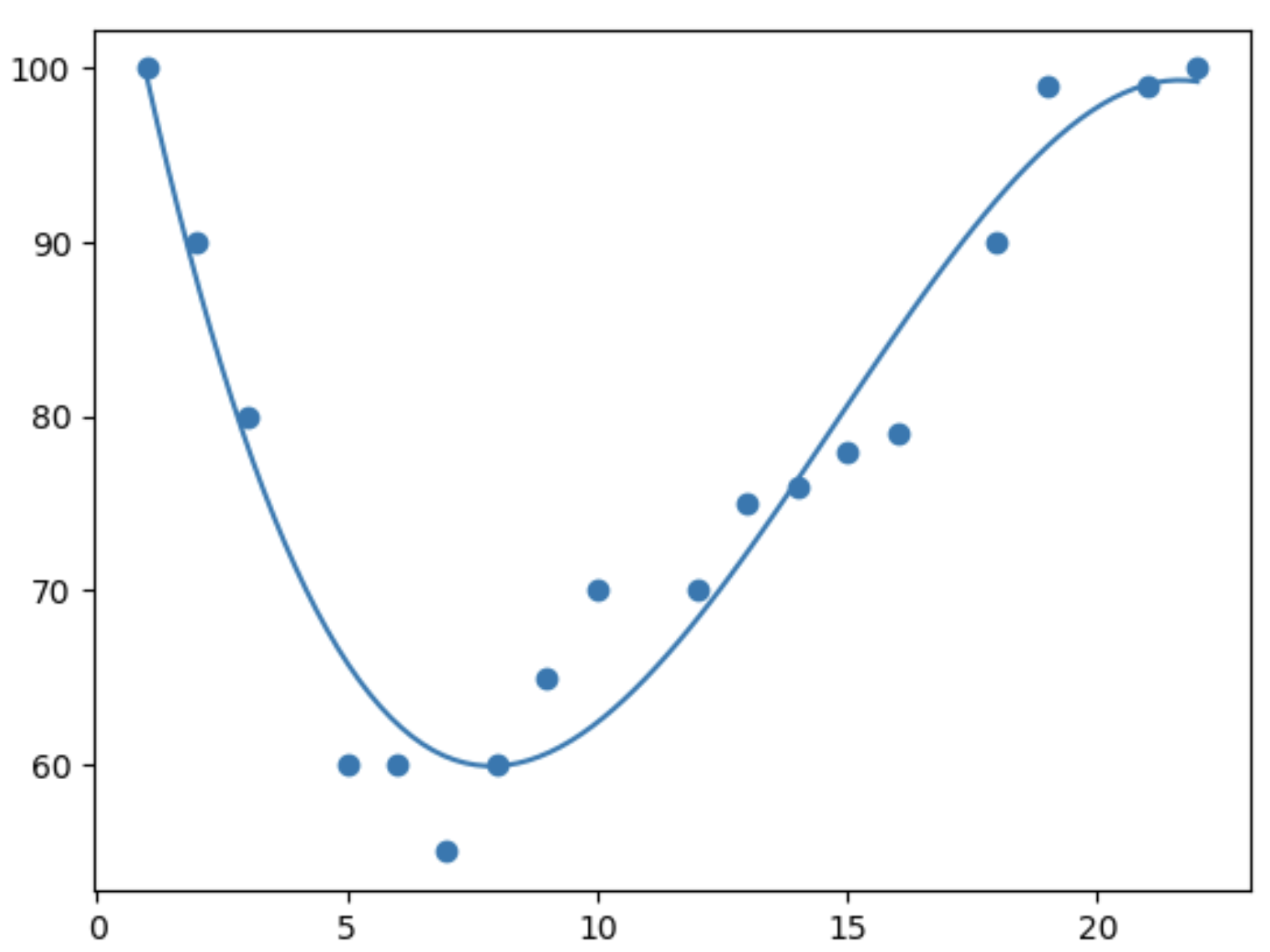

Polynomial Regression

Polynomial Regression is used when the relationship between input and output is a curve and not a straight line.

When to use and example:

Example: consider fuel usage at different driving speeds. At low speed, fuel usage is low; at medium speed, the car is efficient; at high speed, fuel usage increases a lot. Because fuel usage does not change by the same amount for every increase in speed, the relationship is a curve, so polynomial regression is used here rather than linear regression.
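Polynomial regression is still a least-squares fit; it just adds powers of the input (x², x³, ...) as extra columns. The sketch below fits a degree-2 curve by solving the normal equations with Gaussian elimination. The speed and fuel numbers are hypothetical, chosen to bend upward at high speed as described above.

```python
def fit_polynomial(xs, ys, degree=2):
    """Least-squares fit of y ≈ c0 + c1*x + ... + c_degree * x**degree.

    Solves the normal equations (A^T A) c = A^T y, where A has the columns
    1, x, x**2, ..., using Gaussian elimination with partial pivoting.
    """
    size = degree + 1
    # Entries of A^T A are sums of powers of x; entries of A^T y are sums of y * x**i
    ata = [[sum(x ** (i + j) for x in xs) for j in range(size)] for i in range(size)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(size)]
    m = [row + [rhs] for row, rhs in zip(ata, aty)]  # augmented matrix

    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size + 1):
                m[r][c] -= factor * m[col][c]

    coeffs = [0.0] * size
    for r in range(size - 1, -1, -1):  # back substitution
        known = sum(m[r][c] * coeffs[c] for c in range(r + 1, size))
        coeffs[r] = (m[r][size] - known) / m[r][r]
    return coeffs


def predict(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))


# Hypothetical measurements: speed (km/h) -> fuel usage (litres per 100 km)
speeds = [20, 40, 60, 80, 100, 120]
fuel = [3.0, 3.4, 4.2, 5.4, 7.0, 9.0]

coeffs = fit_polynomial(speeds, fuel, degree=2)
print([round(c, 5) for c in coeffs])
print(round(predict(coeffs, 110), 2))
```

A positive x² coefficient is what lets the fitted curve bend upward; a straight line cannot do that.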

Lasso Regression (L1)

Lasso regression is linear regression with L1 regularization: it adds a penalty proportional to the sum of the absolute values of the coefficients. It is used to prevent overfitting. The penalty shrinks coefficients toward zero, and it can push the coefficients of input features that are useless or have little impact all the way to exactly zero. This removes those features, keeping only the ones that have a high impact.

In short, useless features are dropped.

Ridge Regression (L2)

Ridge regression is linear regression with L2 regularization: it adds a penalty proportional to the sum of the squared coefficients. It shrinks the coefficients of all variables toward zero, but in practice never makes them exactly zero.

Example: on a soundboard, we turn the background noise down instead of muting it. We can still faintly hear it, but it has little impact on the main voice. Ridge treats weak features the same way: it keeps them instead of removing them completely, but they have less effect on the output.
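The difference between the two penalties is easiest to see in the special case where the features are orthonormal (uncorrelated and scaled to unit length). That assumption, made only to keep the sketch short, gives both methods a closed form: Lasso subtracts a fixed amount from each coefficient and clips at zero, while Ridge divides each coefficient by the same factor. The coefficients below are hypothetical.

```python
import math


def lasso_shrink(ols_coefs, lam):
    """L1 (Lasso) coefficients, assuming orthonormal features.

    Minimising 0.5 * RSS + lam * sum(|b|) gives soft-thresholding: each
    coefficient moves toward zero by lam and stops at exactly zero.
    """
    return [0.0 if abs(b) <= lam else b - math.copysign(lam, b) for b in ols_coefs]


def ridge_shrink(ols_coefs, lam):
    """L2 (Ridge) coefficients, assuming orthonormal features.

    Minimising 0.5 * RSS + 0.5 * lam * sum(b**2) divides every coefficient
    by (1 + lam): smaller, but never exactly zero unless it started at zero.
    """
    return [b / (1.0 + lam) for b in ols_coefs]


# Hypothetical unregularised (ordinary least squares) coefficients for four features
ols = [3.0, 0.4, -2.0, -0.1]
print(lasso_shrink(ols, 0.5))  # [2.5, 0.0, -1.5, 0.0]
print(ridge_shrink(ols, 0.5))  # every coefficient shrinks, none becomes zero
```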

Decision Tree Regression

Decision Tree Regression works the way humans think. It breaks the problem into smaller parts and makes a decision at each step. Let us take an example:

When we buy a house, do we think in terms of a formula like price = ax + b? No. We think like this:

Is the house big or small?

What’s the carpet area?

Is the house new or old?

A decision tree copies the same thinking process.

It asks questions, splits the data, and finally concludes with a number.

When to use?

When the data is non-linear.

When the prediction should follow rule-based logic.

When you later want to move on to Random Forest or Gradient Boosting, which are built from many decision trees.
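A minimal regression-tree sketch on a single numeric feature (house size). At each node it tries every threshold between neighbouring x values, keeps the split with the smallest squared error, and stores the average price in each leaf. The function names, the data, and the depth limit are illustrative assumptions.

```python
def _sse(values):
    """Sum of squared errors around the mean: how 'mixed' a group of prices is."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def build_tree(xs, ys, depth=0, max_depth=2, min_samples=2):
    """Grow a regression tree: internal nodes are (threshold, left, right), leaves are numbers."""
    mean_y = sum(ys) / len(ys)
    if depth >= max_depth or len(ys) < min_samples:
        return mean_y  # leaf: predict the average target
    pairs = sorted(zip(xs, ys))
    best = None  # (sse, threshold, split_index)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        sse = _sse(left) + _sse(right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, i)
    if best is None:
        return mean_y
    _, threshold, i = best
    left, right = pairs[:i], pairs[i:]
    return (
        threshold,
        build_tree([x for x, _ in left], [y for _, y in left], depth + 1, max_depth, min_samples),
        build_tree([x for x, _ in right], [y for _, y in right], depth + 1, max_depth, min_samples),
    )


def predict(node, x):
    """Answer 'is x <= threshold?' at each node until a leaf (a number) is reached."""
    while isinstance(node, tuple):
        threshold, left, right = node
        node = left if x <= threshold else right
    return node


# Hypothetical data: house size (m²) -> price (thousands of dollars)
sizes = [50, 60, 70, 120, 130, 140]
prices = [100, 110, 105, 300, 310, 305]
tree = build_tree(sizes, prices, max_depth=1)
print(tree)               # (95.0, 105.0, 305.0)
print(predict(tree, 65))  # 105.0
```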

Random Forest Regression

Random Forest Regression is an ensemble learning technique that predicts a number by combining the predictions of many decision tree regressors.

In short, don’t trust one opinion. Ask many experts and take the average.

Imagine predicting house prices.

Instead of asking one property expert, you ask:

- One expert who focuses on location

- One on size

- One on age

- One on market trends

Each one gives a price estimate.

You average all answers → final price.

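That is the idea behind Random Forest Regression. In practice, each tree is trained on a random sample of the data (and considers random subsets of the features), and the forest averages the trees' predictions.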
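A simplified sketch of the averaging idea. Each "expert" is a depth-1 tree (a single split) trained on a bootstrap sample, i.e. rows drawn at random with replacement, and the forest returns the average of their answers. To keep it short it uses only one feature and skips the random feature selection that full Random Forests (for example scikit-learn's `RandomForestRegressor`) also perform; the data is hypothetical.

```python
import random


def fit_stump(xs, ys):
    """Depth-1 regression tree: one split on x; each side predicts its mean."""
    pairs = sorted(zip(xs, ys))
    overall = sum(ys) / len(ys)
    best = None  # (sse, threshold, left_mean, right_mean)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    if best is None:  # every sampled x is identical: predict the overall mean
        return (float("inf"), overall, overall)
    return best[1:]


def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest


def predict_forest(forest, x):
    """Ask every tree, then average their answers."""
    preds = [left_mean if x <= t else right_mean for t, left_mean, right_mean in forest]
    return sum(preds) / len(preds)


# Hypothetical data: house size (m²) -> price (thousands of dollars)
sizes = [50, 60, 70, 80, 100, 120, 140, 160]
prices = [150, 170, 200, 230, 290, 350, 410, 480]

forest = fit_forest(sizes, prices)
print(round(predict_forest(forest, 65)), round(predict_forest(forest, 150)))
```

Because each tree sees a slightly different sample, their mistakes partly cancel out when averaged.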

Support Vector Regression (SVR)

Support Vector Regression (SVR) is a supervised learning regression technique that predicts continuous values by finding a function that is as flat as possible, while allowing a small margin of error.

In simple words, SVR tries to fit a line (or curve) that most points stay close to, not necessarily touching every point.

Imagine a teacher checking student marks.

The teacher says:

“If your answer is within ±2 marks, I will accept it.”

- Errors within ±2 → ignored

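- Errors outside ±2 → penalized, and the penalty grows with how far outside the margin they fall

SVR works the same way: errors within a margin called epsilon (ε) are ignored, and only errors beyond it are penalized.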
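The teacher's ±2 rule is the epsilon-insensitive loss that SVR uses. The sketch below shows only that loss, next to ordinary squared error for comparison, using hypothetical marks. A full SVR also keeps the fitted function as flat as possible and weights the outside errors by a parameter usually called `C`; scikit-learn's `sklearn.svm.SVR` exposes both `epsilon` and `C`.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=2.0):
    """SVR's loss: zero inside the ±epsilon margin, growing linearly outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def squared_loss(y_true, y_pred):
    """Ordinary regression loss, for comparison: every error counts."""
    return (y_true - y_pred) ** 2


# Teacher example: the correct mark is 70 and the tolerance (epsilon) is 2 marks
for answer in (69.0, 71.5, 75.0):
    print(answer, epsilon_insensitive_loss(70.0, answer), squared_loss(70.0, answer))
# 69.0 0.0 1.0
# 71.5 0.0 2.25
# 75.0 3.0 25.0
```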

Use SVR when:

A non-linear relationship exists, e.g. a small change in input may cause a big change in output (SVR handles this through non-linear kernels)

The dataset is small to medium (roughly 50 to 10,000 samples)

The data contains noise

High precision matters

Time Series Regression

Time Series Regression is used when your data is ordered by time, and the goal is to predict a future number using past values and time-related patterns.

Past values + time patterns → future prediction

In normal regression:

- Data rows are independent

- Order doesn’t matter

In time series regression:

- Order matters

- Today depends on yesterday

- Tomorrow depends on today

If the rows are shuffled, this meaning is lost. Imagine a shopkeeper tracking daily sales.

- Monday → 100 units

- Tuesday → 120 units

- Wednesday → 140 units

The sales are not random; they follow a trend over time.

To predict Thursday's sales, the owner will look at past days, weekly patterns, and seasonal effects.

That prediction logic is time series regression.
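One simple form of time series regression uses the previous value as the input (a "lag-1" model): fit today's sales from yesterday's with least squares, then predict tomorrow. The sketch below applies it to the three days above. With so little history it can only capture the trend; weekly or seasonal patterns would need more data and extra features such as the day of the week.

```python
def fit_lag1(series):
    """Fit value[t] = a + b * value[t - 1] by least squares on consecutive pairs."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_p, mean_c = sum(prev) / n, sum(curr) / n
    var = sum((p - mean_p) ** 2 for p in prev)
    if var == 0:  # flat history: fall back to predicting the average
        return mean_c, 0.0
    cov = sum((p - mean_p) * (c - mean_c) for p, c in zip(prev, curr))
    b = cov / var
    return mean_c - b * mean_p, b


sales = [100, 120, 140]  # Monday, Tuesday, Wednesday
a, b = fit_lag1(sales)
thursday = a + b * sales[-1]
print(thursday)  # 160.0
```

Note that the pairs are built from consecutive days, so shuffling the series would change the result; this is exactly why order matters here.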

Practical Applications of Supervised Learning

Healthcare: Patient data such as blood pressure (BP), sugar level, blood test reports, age, and weight is given to a model that was trained on historical records of earlier patients, each labeled with the outcome (for example, the disease that patient had). The model applies the patterns it learned to the new patient, so the system might suggest:

A high chance of developing high blood pressure in the next few years.

The X-ray shows signs of pneumonia.

Which type of drug is likely to suit the patient.

In simple words, the input is patient data and the output is a Yes/No answer, a disease name, or a risk score as a percentage. This helps the doctor decide what to do next.

Ecommerce & Retail: Here, user behaviour data from the website is provided as input, such as what the user browses, buys, clicks, and searches for, time spent on pages, past purchases, reviews, abandoned carts, location, and device.

The model learns from millions of shoppers and then makes suggestions. For example, on Amazon we see "People who bought A also bought B". The model can also make predictions such as "This person is about to stop shopping here" or "Prices like this sell faster".

E-commerce websites mainly use this to increase sales.

Unsupervised Learning

In unsupervised learning, we do not provide labeled data. We provide raw data and expect the system to explore patterns, similarities, and structures on its own.

Suppose we give the system a collection of unlabeled images of dogs and cats. The system learns the structure of dog images and cat images separately and creates a cluster for each. The next time a new image arrives, the system can assign it to the right cluster using the learned pattern. Note that the system does not know the names "dog" and "cat"; it only knows which group an image belongs to.
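Grouping without labels is what clustering algorithms such as K-Means do. The sketch below assumes each image has already been turned into two hypothetical numbers (weight and ear length); K-Means only ever sees these numbers, never the words "dog" or "cat". The farthest-point start is a simple deterministic choice made for this sketch; library implementations typically use random or k-means++ starts.

```python
import math


def kmeans(points, k, iterations=20):
    """Group unlabeled points into k clusters (Lloyd's algorithm)."""
    # Deterministic start: the first point, then repeatedly the point farthest
    # from the centers chosen so far
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))

    for _ in range(iterations):
        # Assignment step: each point joins its nearest center
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster
        new_centers = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:
            break  # assignments have stabilised
        centers = new_centers
    return centers, clusters


# Hypothetical features from unlabeled animal images: (weight kg, ear length cm)
animals = [(30, 10), (4, 5), (25, 12), (5, 6), (35, 11), (3.5, 5.5)]
centers, clusters = kmeans(animals, k=2)
for i, cluster in enumerate(clusters):
    print(f"cluster {i}: {cluster}")
```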

In practice, unsupervised learning is mainly used to group data, find hidden relationships, and detect unusual behavior (anomaly detection). For anomaly detection, the model learns what normal data looks like and flags data that looks different. This is helpful in fraud detection, cybersecurity, and system monitoring.
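One of the simplest unsupervised ways to learn "what normal looks like" is to summarize past values by their mean and standard deviation and flag anything too far away (a z-score rule). The transaction amounts and the 3-standard-deviation cut-off are hypothetical; real fraud systems use many more signals.

```python
import statistics


def fit_normal_profile(values):
    """Learn what 'normal' looks like from unlabeled history: mean and spread."""
    return statistics.mean(values), statistics.pstdev(values)


def is_anomaly(value, profile, threshold=3.0):
    """Flag a value lying more than `threshold` standard deviations from the mean."""
    mean, std = profile
    return abs(value - mean) > threshold * std


# Hypothetical history of one customer's card transactions (dollars), no labels
history = [20, 35, 25, 30, 40, 22, 28, 33]
profile = fit_normal_profile(history)

for amount in (31, 950):
    print(amount, "unusual" if is_anomaly(amount, profile) else "normal")
```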

In simple words, unsupervised learning allows machines to organize, observe, and make sense of data on their own.

Unsupervised learning is mainly divided into three types:

- Clustering

Grouping similar data points together is known as clustering, as we saw in the dog and cat example above.

Methods used in clustering include K-Means, Hierarchical Clustering, DBSCAN, OPTICS, Gaussian Mixture Models, and Mean Shift Clustering.

- Dimensionality Reduction

It aims to simplify a complex dataset by reducing the number of features, focusing on the most relevant ones, improving data processing efficiency, and mitigating the curse of dimensionality.

Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are two common methods for dimensionality reduction.

- Association

Association is a fundamental technique for identifying interesting relationships and patterns across large datasets. It is widely used in retail, healthcare, and marketing to uncover relationships between multiple attributes/features.

An example we have seen on many e-commerce websites is a recommendation to buy butter when you have already bought bread, because the two purchases are highly correlated.

Similarly, Walmart places products next to each other based on historical data: if 80% of users who buy product A also buy product B, product B is placed alongside product A to increase sales.
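The "80% of buyers of A also buy B" rule is a confidence measure. The sketch below counts single items and item pairs across hypothetical shopping baskets and keeps rules A -> B whose support (how often A and B appear together) and confidence (how often B appears given A) pass illustrative thresholds. Full algorithms such as Apriori extend this idea to larger item sets.

```python
from collections import Counter
from itertools import combinations


def association_rules(baskets, min_support=0.3, min_confidence=0.7):
    """Find rules A -> B between single items.

    support(A, B)     = fraction of baskets containing both A and B
    confidence(A->B) = count(A and B) / count(A)
    """
    n = len(baskets)
    item_counts = Counter(item for basket in baskets for item in set(basket))
    pair_counts = Counter(
        pair for basket in baskets for pair in combinations(sorted(set(basket)), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):  # check both directions of the rule
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return rules


# Hypothetical shopping baskets
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["bread", "butter", "jam"],
    ["bread", "eggs"],
    ["bread", "butter", "eggs"],
    ["milk", "eggs"],
]
for lhs, rhs, support, confidence in association_rules(baskets):
    print(f"{lhs} -> {rhs}: support {support:.2f}, confidence {confidence:.2f}")
```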

Reinforcement Learning (RL)

Reinforcement learning is quite different from supervised and unsupervised learning. An agent interacts with an environment: it observes the current state, takes actions, and receives rewards. Over time it learns a policy (a strategy for choosing actions) that maximizes the total reward.

The learning process in reinforcement learning balances exploration and exploitation.

Exploration: The agent tries different actions in the environment, observes how the environment responds, and learns from it. Exploration aims to gather as much information about the environment as possible.

Exploitation: The agent uses its existing knowledge of the environment to pick the actions it currently believes give the highest reward.
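A classic small illustration of this trade-off is the epsilon-greedy strategy on a multi-armed bandit: with probability epsilon the agent explores a random action, otherwise it exploits the action with the best average reward so far. To keep the sketch easy to follow, each action here pays a fixed, hypothetical reward; in real problems rewards are noisy.

```python
import random


def epsilon_greedy(rewards, steps=1000, epsilon=0.1, seed=0):
    """Learn which action pays best by mixing exploration and exploitation.

    rewards[i] is the hidden (hypothetical, fixed) reward of action i; the
    agent only observes the reward of the action it actually tries.
    """
    rng = random.Random(seed)
    n = len(rewards)
    estimates = [0.0] * n  # running average reward per action
    counts = [0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            action = max(range(n), key=lambda i: estimates[i])  # exploit: best so far
        reward = rewards[action]
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy([0.2, 0.5, 0.9])
print(counts)  # most pulls end up on action 2, the best one
```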

Reinforcement learning is commonly used in robotics, game playing, and autonomous vehicles.

Methods used in reinforcement learning include Q-learning, Deep Q-Networks (DQN), and policy gradient methods.
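A minimal tabular Q-learning sketch on a hypothetical five-cell corridor where only reaching the last cell gives a reward. The agent moves randomly while learning (pure exploration), which Q-learning tolerates because its update always uses the best next action rather than the one actually taken. The learning rate `alpha`, discount `gamma`, and episode counts are illustrative.

```python
import random

N_STATES = 5        # positions 0..4 in a corridor; state 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action_index):
    """Hypothetical toy environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0, max_steps=100):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.randrange(2)  # behave randomly while learning
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # greedy action per non-goal state (1 = move right)
```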

When to use which ML method?

This will be helpful when designing models.

If you are sure that every example in your data has a label (the correct output), go for supervised learning. If the dataset has no labels, go for unsupervised learning.

If your output is a category name, go for classification.

If your output is a number, go for regression.

If your data is extremely complex (patterns that humans cannot easily describe) or needs a lot of computation to model, go for deep learning/neural networks. If not, go for classical ML methods such as decision trees or logistic regression.
